Debugging Your Product Design: 10-Step Troubleshooting Guide

1: Have the Right Mental Attitude

Approach debugging as a problem-solving exercise. Visualise success to stay motivated despite pressure from stakeholders.

2: Gather Your Evidence and Take Notes

Avoid jumping to conclusions. Use spreadsheets to document symptoms meticulously, recognising that similar symptoms can stem from different bugs.

3: Reproduce the Problem

This is often the most challenging step due to the intermittent nature of bugs. Set up automated tests to consistently reproduce the issue without getting sidetracked.

4: Gather the Evidence Again

Observe without troubleshooting initially. Record data dispassionately and repeat tests for consistency.

5: Start with Simple Errors

Check for common issues, such as incorrect wiring or the wrong voltage being applied, before delving deeper.

6: Break Down the Problem

Divide the project into smaller parts to isolate where the bug might be occurring.

7: Talk Through the Problem

Discussing the problem with peers often leads to breakthroughs, as explaining it can reveal new insights.

8: Apply the Fix

Implement your solution based on gathered evidence and analysis.

9: Try and Break It Again

Test rigorously to ensure that the bug does not reappear under similar conditions.

10: ‘Disappearing’ Bugs Are Still There If Unfixed!

Remember – if a bug seems to vanish without explanation, it probably hasn’t been resolved properly and could return at an inopportune moment.

Debugging is serious business. The cost of getting it wrong is ever present in the media.

Think of the 2016 Galaxy Note 7 fiasco, said to have cost Samsung around $17B to sort out.[1]

How about the 2024 BMW braking system recall of nearly 1.5 million cars, with a financial impact of EUR 1 billion (£0.84bn or $1.06bn)?[2]

And who can forget the July 2024 worldwide glitch in airport software, primarily caused by a faulty update? Initial estimates put the overall economic damage at between $10 billion and $15 billion.[3]

Bugs can have a variety of causes, from human error, faulty remote debugging or incorrect data through to bad models. Whatever the cause, troubleshooting product design bugs is a time-consuming and costly process, but an important one.

Indeed, the Pentium Principle of 1994 states: an intermittent technical problem may pose a *sustained and expensive* public relations problem.

- Have you ever had a project drag on for months with an intermittent bug?

- Have you been involved in a product recall due to a bug that has appeared in the field?

- Have you seen faults magically disappear, only to reappear 6 months later?

While technology and test equipment may have moved on immensely, the art of debugging remains more driven by experience than tools.

According to Andy Neil, owner of Antronics, before you even start the design you should think about how you will debug your device, i.e. what facilities will you provide to make it debuggable?

For example:

* Access to the processor’s debug interface

* A UART (or similar) for diagnostic trace output

* An LED (or LEDs) to give status indications

* Testpoints to access key signals

* Some uncommitted IOs for test purposes

* Software support for state/event logging

Most importantly, if you don’t have sufficient resources to dedicate 10% of them to diagnostics, then you just don’t have enough resources.

You can follow our 10-step guide to approaching a debug below, or watch the video where I explain the debugging process in more detail.

Step 1: Have the right mental attitude

It’s no secret that bugs can be infuriating. This is only made worse by pressure from stakeholders, especially when it can be very hard to predict a conclusion date.

But debugging can be hugely rewarding, especially when you treat it as an exercise in problem-solving. Before you begin, the main thing to remember is to picture success!

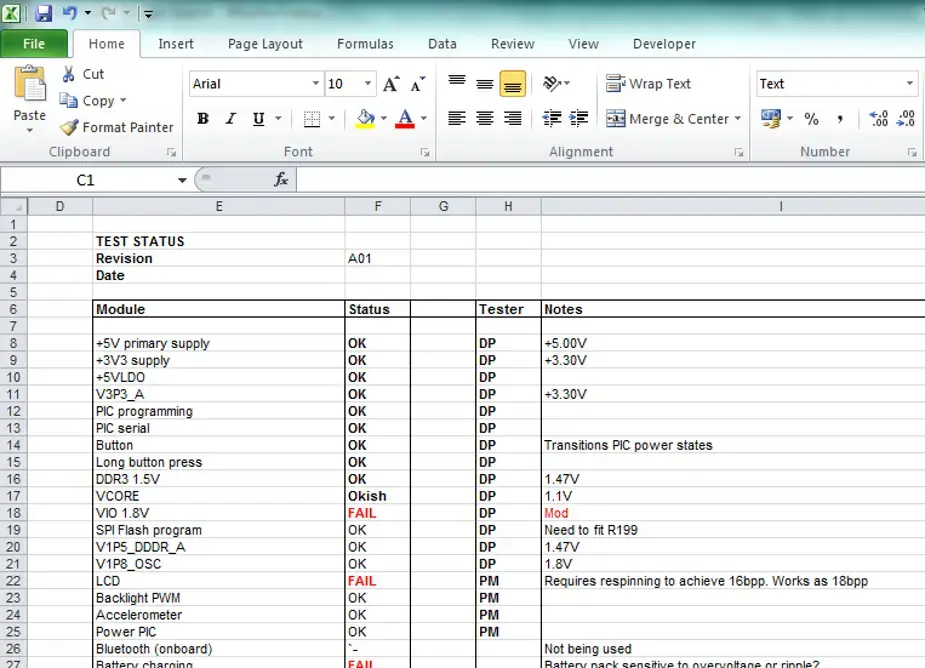

Step 2: Documentation - gather your evidence and take notes

Particularly when troubleshooting a complex bug, it is imperative not to jump to conclusions, even when the answer initially appears obvious.

Notebooks are always good, but spreadsheets are even better.

But be warned, different bugs can present the same symptom, which can produce confusing and contradictory answers.

When gathering evidence, if symptoms are even marginally different, still log a separate bug for each set of symptoms.

If you later find that they are the same bug, you can have the joy of closing two bugs at once after working through the remaining steps!
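If your notes live in a spreadsheet, one way to apply the "separate bug per symptom set" rule is to append every observation as a CSV row and give each distinct set of symptoms its own bug ID. The sketch below is illustrative only: the column names, the `BugLog` class and the exact-match rule for symptom sets are assumptions, not a prescribed format. It writes to any text stream; for a real file, open it with `newline=""` as the `csv` module recommends.

```python
import csv
import io
from datetime import datetime, timezone

# Hypothetical column layout for the bug spreadsheet
FIELDS = ["timestamp", "bug_id", "conditions", "symptoms"]

class BugLog:
    """Append-only observation log; each distinct symptom set gets its own bug ID."""

    def __init__(self, stream):
        self.writer = csv.DictWriter(stream, fieldnames=FIELDS)
        self.writer.writeheader()
        self.bug_ids = {}  # frozenset of symptoms -> bug ID

    def bug_id_for(self, symptoms):
        # Even marginally different symptoms give a different key, hence a new bug ID.
        key = frozenset(symptoms)
        if key not in self.bug_ids:
            self.bug_ids[key] = f"BUG-{len(self.bug_ids) + 1:03d}"
        return self.bug_ids[key]

    def record(self, symptoms, conditions):
        """Write one timestamped observation row and return its bug ID."""
        bug_id = self.bug_id_for(symptoms)
        self.writer.writerow({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "bug_id": bug_id,
            "conditions": "; ".join(f"{k}={v}" for k, v in sorted(conditions.items())),
            "symptoms": "; ".join(sorted(symptoms)),
        })
        return bug_id

if __name__ == "__main__":
    buf = io.StringIO()
    log = BugLog(buf)
    log.record({"no boot", "LED off"}, {"temp_C": 25, "supply_V": 5.0})
    log.record({"no boot", "LED flashing"}, {"temp_C": 40, "supply_V": 5.0})
    log.record({"LED off", "no boot"}, {"temp_C": 25, "supply_V": 4.8})
    print(buf.getvalue())
```

Here the first and third observations share a symptom set and so share a bug ID, while the second gets its own. If they later turn out to be the same underlying bug, the two IDs can be closed together.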

Step 3: Reproduce the problem

Arguably the hardest step.

Bugs can be intermittent and dependent on a plethora of factors, from environment or geography, through to attached equipment and user error.

Set up your test and automate it if necessary, but don’t get sidetracked!

Observe, and leave fixing it until later.
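As a minimal sketch of an automated reproduction loop, assume one attempt (for example, a power cycle followed by a check that the unit booted) can be wrapped in a function that returns `True` on success. `run_trial`, `simulated_boot` and its 5% failure rate are hypothetical stand-ins for a real test rig. The loop only observes and records which attempts failed; it makes no attempt to fix anything.

```python
import random
from dataclasses import dataclass, field

@dataclass
class ReproResult:
    trials: int = 0
    failures: list = field(default_factory=list)  # indices of failing trials

    @property
    def failure_rate(self):
        return len(self.failures) / self.trials if self.trials else 0.0

def reproduce(run_trial, n_trials):
    """Run the trial n_trials times and record which ones failed; no fixing here."""
    result = ReproResult()
    for i in range(n_trials):
        result.trials += 1
        if not run_trial(i):
            result.failures.append(i)
    return result

# Hypothetical stand-in for a real rig: fails roughly 5% of the time.
rng = random.Random(1234)  # fixed seed so the simulated run is repeatable

def simulated_boot(trial_index):
    return rng.random() >= 0.05

if __name__ == "__main__":
    r = reproduce(simulated_boot, 1000)
    print(f"{len(r.failures)} failures in {r.trials} trials "
          f"({r.failure_rate:.1%}); first failures at {r.failures[:5]}")
```

Recording the indices of failing trials, not just a count, makes it easier to spot patterns later, such as failures clustering after a warm-up period.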

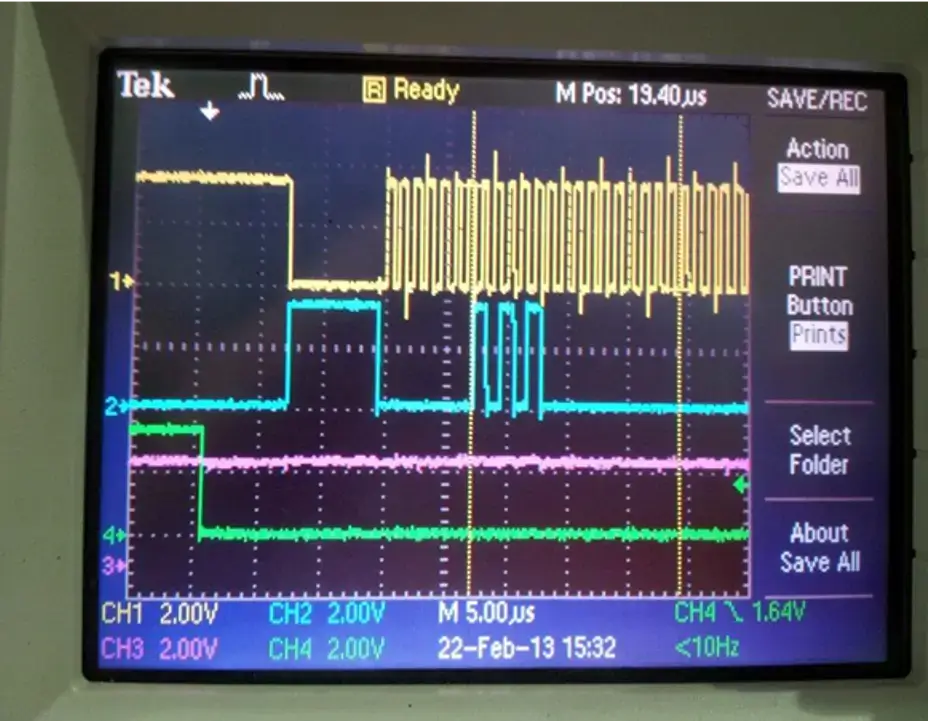

Step 4: Gather the evidence

At this stage, your role is to observe and make notes; most importantly, do not troubleshoot yet.

Look dispassionately at what happens when the bug occurs, and record the data.

Then repeat the test to check that the results are consistent.
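One rough way to judge whether repeated runs agree is to turn each batch of results into a failure-rate estimate with an uncertainty band and check that the bands overlap. The sketch below uses the Wilson score interval, a standard interval for a binomial proportion. That choice is ours rather than part of the guide, and the batch counts are made-up illustration values. Overlapping intervals are only a coarse sanity check, not a formal statistical test.

```python
import math

def wilson_interval(failures, trials, z=1.96):
    """Approximate Wilson score interval for a failure rate (z=1.96 gives ~95%)."""
    if trials == 0:
        raise ValueError("need at least one trial")
    p = failures / trials
    denom = 1 + z**2 / trials
    centre = (p + z**2 / (2 * trials)) / denom
    half = z * math.sqrt(p * (1 - p) / trials + z**2 / (4 * trials**2)) / denom
    return max(0.0, centre - half), min(1.0, centre + half)

def batches_consistent(batches, z=1.96):
    """True if every batch's interval overlaps every other batch's (rough check only)."""
    intervals = [wilson_interval(f, n, z) for f, n in batches]
    return all(lo1 <= hi2 and lo2 <= hi1
               for i, (lo1, hi1) in enumerate(intervals)
               for (lo2, hi2) in intervals[i + 1:])

if __name__ == "__main__":
    # Illustrative (failures, trials) for three repeat runs
    runs = [(5, 100), (7, 100), (4, 100)]
    for f, n in runs:
        lo, hi = wilson_interval(f, n)
        print(f"{f}/{n}: {lo:.1%} to {hi:.1%}")
    print("consistent:", batches_consistent(runs))
```

If one batch's interval sits well apart from the others, something probably changed between runs (temperature, firmware build, attached equipment), and that difference is itself evidence worth noting.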

Step 5: Start with the simple errors

These make up a high proportion of all bugs.

Some examples include:

- Connectors wired incorrectly

- PCB build errors

- Wrong voltage applied to chips

- Clock running at the wrong frequency

- Pinout of chip is incorrect
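Several of these checks, such as supply voltages and clock frequency, come down to comparing a measurement against an expected value within a tolerance. Below is a minimal sketch of such a checklist. The rail names, nominal values and tolerances are hypothetical examples, and the readings would come from your own multimeter, oscilloscope or frequency counter.

```python
from dataclasses import dataclass

@dataclass
class Check:
    name: str
    nominal: float
    tolerance_pct: float  # allowed deviation from nominal, in percent
    unit: str

    def passes(self, measured):
        return abs(measured - self.nominal) <= self.nominal * self.tolerance_pct / 100

# Hypothetical board checklist; take real values from your schematic and datasheets.
CHECKLIST = [
    Check("3V3 rail", 3.3, 5.0, "V"),
    Check("1V8 core rail", 1.8, 3.0, "V"),
    Check("MCU crystal", 16e6, 0.01, "Hz"),
]

def run_checklist(checklist, measurements):
    """Return human-readable failures; an empty list means every check passed."""
    failures = []
    for check in checklist:
        measured = measurements.get(check.name)
        if measured is None:
            failures.append(f"{check.name}: not measured")
        elif not check.passes(measured):
            failures.append(f"{check.name}: measured {measured:g} {check.unit}, "
                            f"expected {check.nominal:g} {check.unit} "
                            f"+/-{check.tolerance_pct:g}%")
    return failures

if __name__ == "__main__":
    readings = {"3V3 rail": 3.29, "1V8 core rail": 2.5, "MCU crystal": 8e6}
    for line in run_checklist(CHECKLIST, readings):
        print(line)
```

Treating an unmeasured item as a failure is deliberate: a skipped check is easily mistaken for a passed one.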


Step 6: Break down the problem

Once the easier issues have been checked and debugged, it is time to break the project into smaller parts.

For example, if you have used a DigiConnect board with a simple SPI ROM and that worked fine, you might next try the target board with it.

If this still all works fine, then your problem is likely the interface with the target board itself.
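The board example is a form of incremental integration: start from a known-good baseline, add one part at a time, and stop at the first addition that makes the test fail. The sketch below assumes a hypothetical `test_config` callback that builds or runs a given combination of parts and returns `True` when it works. The stage names and the `fake_test` stand-in are illustrative only.

```python
def first_failing_stage(stages, test_config):
    """Add stages one at a time to a known-good baseline.

    Returns (index, name) of the first stage whose addition makes test_config
    fail, or None if every cumulative configuration passes. test_config
    receives the list of stage names included so far.
    """
    included = []
    for index, stage in enumerate(stages):
        included.append(stage)
        if not test_config(list(included)):  # pass a copy so the callback can't alter it
            return index, stage
    return None

if __name__ == "__main__":
    stages = ["DigiConnect + SPI ROM", "target board", "target board interface"]

    # Hypothetical stand-in: pretend the fault lives in the target board interface.
    def fake_test(config):
        return "target board interface" not in config

    print(first_failing_stage(stages, fake_test))  # (2, 'target board interface')
```

This linear walk is the simplest approach. With many stages, a binary search over the same ordered list needs fewer runs, provided that adding a stage never makes a failing configuration pass again. If the fault only appears when two particular parts interact, the stage that "fails first" is a suspect, not a verdict.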

Step 7: Talk through the problem

It sounds simple, but once you have a sounding board, a lot of problems can become clearer.

Being asked even the simplest of questions can give you the lightbulb moment: a solution, or a new line of enquiry to follow.

According to Andy Neil, owner of Antronics, when your system is not behaving as intended, think about what the system is doing and ask yourself: ‘if I wanted it to behave like this, how would I achieve that?’

Step 8: Apply the fix

Time to put that theory into practice!

Step 9: Try and break it again

The key here is to try and break it again in the way that you originally broke it.

That may sound like an obvious thing to say, but if you had to turn it on a thousand times to recreate the breakage, turning it on once or twice isn’t going to be enough to show that the problem is fixed.

Suppose the system originally failed to boot five times out of a hundred. If you apply the fix, turn it on once and it boots, that tells you very little: even if the fix did nothing, it would still have booted 95% of the time.

That just means it booted normally.

If you turn it on twice and it boots both times, there is still roughly a 90% chance (0.95^2 ≈ 0.90) that you would have seen the same result with the bug unfixed; it may just be luck that it worked both times, and so on.

After a third successful boot, that chance is about 86% (0.95^3 ≈ 0.86).

To be 99% confident that we fixed it, we want the chance of an unfixed system passing every test to be less than 1%. With the original 5% failure rate, an unfixed system passes n boots in a row with probability 0.95^n, so we need 0.95^n < 0.01.

That’s where logarithms come in: n > log(0.01) / log(0.95), i.e. the logarithm of 0.01 to base 0.95, which is about 89.78.

Therefore, if I turn this system on and off 90 times and it doesn’t fail once, then I’m 99% confident that I fixed the issue.
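The same arithmetic works for any failure rate and confidence level. If an unfixed bug fails with probability p per attempt, it passes n attempts in a row with probability (1 - p)^n, so you need the smallest whole n with (1 - p)^n < 1 - confidence. The function name below is our own. The calculation also assumes that each attempt is independent, which real intermittent bugs (for example, temperature-dependent ones) may not be.

```python
import math

def trials_needed(failure_rate, confidence=0.99):
    """Smallest n such that an unfixed bug with the given per-trial failure rate
    would pass n trials in a row with probability below (1 - confidence)."""
    if not 0 < failure_rate < 1:
        raise ValueError("failure_rate must be between 0 and 1")
    if not 0 < confidence < 1:
        raise ValueError("confidence must be between 0 and 1")
    # (1 - p)^n < 1 - c  <=>  n > log(1 - c) / log(1 - p); log1p keeps small p accurate
    bound = math.log1p(-confidence) / math.log1p(-failure_rate)
    return math.floor(bound) + 1  # smallest integer strictly greater than the bound

if __name__ == "__main__":
    print(trials_needed(0.05, 0.99))  # 90, matching the worked example
    for p in (0.05, 0.01):
        for c in (0.95, 0.99):
            print(f"failure rate {p:.0%}, confidence {c:.0%}: "
                  f"{trials_needed(p, c)} clean trials")
```

Rarer bugs need far more clean trials: a 1-in-100 failure needs several hundred consecutive passes for the same confidence. This is another reason to automate the reproduction rig from step 3.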

Step 10: ‘Disappearing’ bugs are still there if you haven’t fixed them

When bugs go away of their own accord, everyone breathes a sigh of relief.

However, if it happened once before, it will eventually happen again.

And if you haven’t fixed it, it will likely bite you at the worst possible moment. Most importantly, it probably means that step 3 (reproduce the problem) hasn’t been tried enough.

It cannot be over-emphasised how important replication is.

For example, if a bug occurs 5 times in 100, then a single clean test proves very little: even if it wasn’t fixed, there is a 95% chance that it simply didn’t occur.

You need to confirm that it has been fixed by repeating the test.

There are occasions, of course, when time is short and bugs are a little too persistent. If you find yourself or your engineers in that position, there are debug experts, such as the ByteSnap team, you can call on to assist.

What about AI and Machine Learning for Debugging?

AI and machine learning are often hailed as game-changers in the debugging process, promising increased efficiency and precision.

However, it’s important to remember the complexities and nuances involved in debugging.

Privacy and Data Control: This is a primary concern around AI and debugging. AI systems require vast amounts of data to function effectively, which can pose significant privacy risks.

During debugging, sensitive data might be inadvertently accessed or misused, potentially leading to breaches of privacy regulations.

It’s essential to implement robust data protection measures, such as anonymisation and strict access controls, to safeguard individual privacy.

Algorithmic Bias: AI models are only as good as the data they’re trained on.

If this data contains biases, the AI system can perpetuate these biases, leading to unfair or discriminatory outcomes.

In debugging, this could mean that certain types of bugs are overlooked or misinterpreted due to biased training data.

Transparency and Explainability: AI systems often operate as “black boxes,” making it difficult to understand how they reach their conclusions.

This lack of transparency can be problematic in debugging, where understanding the root cause of an issue is crucial.

Without clear insights into how AI systems make decisions, there’s a risk that developers might blindly trust AI outputs without fully understanding them.

Developing explainable AI techniques is vital to ensure transparency and foster trust among stakeholders.

Accountability: Determining who is responsible for decisions made by AI systems is another significant challenge.

If an AI system fails to detect a critical bug or makes an erroneous decision during debugging, it’s best to have clear accountability structures in place.

Establishing robust governance frameworks can help manage these challenges effectively. While AI and machine learning offer promising benefits for debugging, they are not without their pitfalls.

It’s crucial to approach these technologies cautiously, to make sure that ethical considerations are addressed and that human oversight remains a key component of the debugging process.

Doing so positions you to harness the potential of AI while safeguarding against its risks.

Knowledge Panel - Debug FAQs

What's the best way to document intermittent bugs?

Use a combination of digital tools and traditional methods. Create a detailed log with timestamps, conditions, and symptoms. Consider using screen recording software to capture elusive issues in action.

What strategies can help when dealing with 'disappearing' bugs?

Develop a comprehensive test suite that simulates various conditions.

Implement logging mechanisms to capture system states before and after the bug occurs. Consider environmental factors like temperature or network conditions that might trigger the issue.
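One way to capture system states both before and after a bug is to borrow the pre/post-trigger idea from logic analysers. Keep the most recent N events in a circular buffer; when the bug is detected, freeze that history and keep recording a fixed number of events afterwards. This is a host-side Python sketch of the idea. On a microcontroller it would more likely be a fixed-size array in RAM, and the class name, event strings and buffer sizes here are illustrative assumptions.

```python
from collections import deque

class TriggerLog:
    """Keeps the last `pre` events; after trigger(), captures `post` more events."""

    def __init__(self, pre=8, post=4):
        self.history = deque(maxlen=pre)  # circular buffer: oldest entries drop off
        self.post = post
        self.capture = None               # frozen snapshot once triggered
        self.remaining = 0

    def log(self, event):
        if self.capture is not None and self.remaining > 0:
            self.capture["after"].append(event)
            self.remaining -= 1
        else:
            self.history.append(event)

    def trigger(self, reason):
        if self.capture is None:          # only the first trigger is captured
            self.capture = {"reason": reason, "before": list(self.history), "after": []}
            self.remaining = self.post

    @property
    def complete(self):
        return self.capture is not None and self.remaining == 0

if __name__ == "__main__":
    log = TriggerLog(pre=3, post=2)
    for i in range(10):
        log.log(f"state {i}")
        if i == 5:
            log.trigger("watchdog reset")
    print(log.capture)
```

In this run the capture holds states 3 to 5 from before the (simulated) watchdog reset and states 6 and 7 from after it. Capturing only the first trigger keeps a later, secondary failure from overwriting evidence of the original one.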

What are the potential benefits of using AI in debugging?

AI can potentially automate repetitive tasks, analyse large datasets quickly, and identify patterns that humans might miss.

However, it should be stressed that this approach must be treated with caution, as AI systems are only as good as their training data and may not understand the full context of a project.

Is AI ready to replace human developers in debugging processes?

No, AI is not ready to replace human developers in debugging.

While AI can be a useful tool, it lacks the contextual understanding, creativity, and critical thinking skills that human developers bring to the debugging process.

Human oversight remains essential for effective and responsible debugging.

How do I know when it's time to seek external help with debugging?

If you’ve exhausted internal resources, if the bug is causing significant delays or financial impact, or if you suspect the issue might be related to unfamiliar technologies, it’s time to consider external expertise. Look for specialists with experience in your specific domain.

Dunstan is a chartered electronics engineer who has been providing embedded systems design, production and consultancy to businesses around the world for over 30 years.

Dunstan graduated from Cambridge University with a degree in electronics engineering in 1992. After working in the industry for several years, he co-founded multi-award-winning electronics engineering consultancy ByteSnap Design in 2008. He then went on to launch international EV charging design consultancy Versinetic during the 2020 global lockdown.

An experienced conference speaker domestically and internationally, Dunstan covers several areas of electronics product development, including IoT, integrated software design and complex project management.

In his spare time, Dunstan enjoys hiking and astronomy.